Each semester, many students follow the same procedure before they add classes to their cart: Google the course’s instructor, visit the RateMyProfessors page and scan for comments like “get ready to read” or “amazing lectures.” It’s quick and public, and wanting to know what you’re getting into comes naturally to today’s students. But how much weight should students really give these ratings?

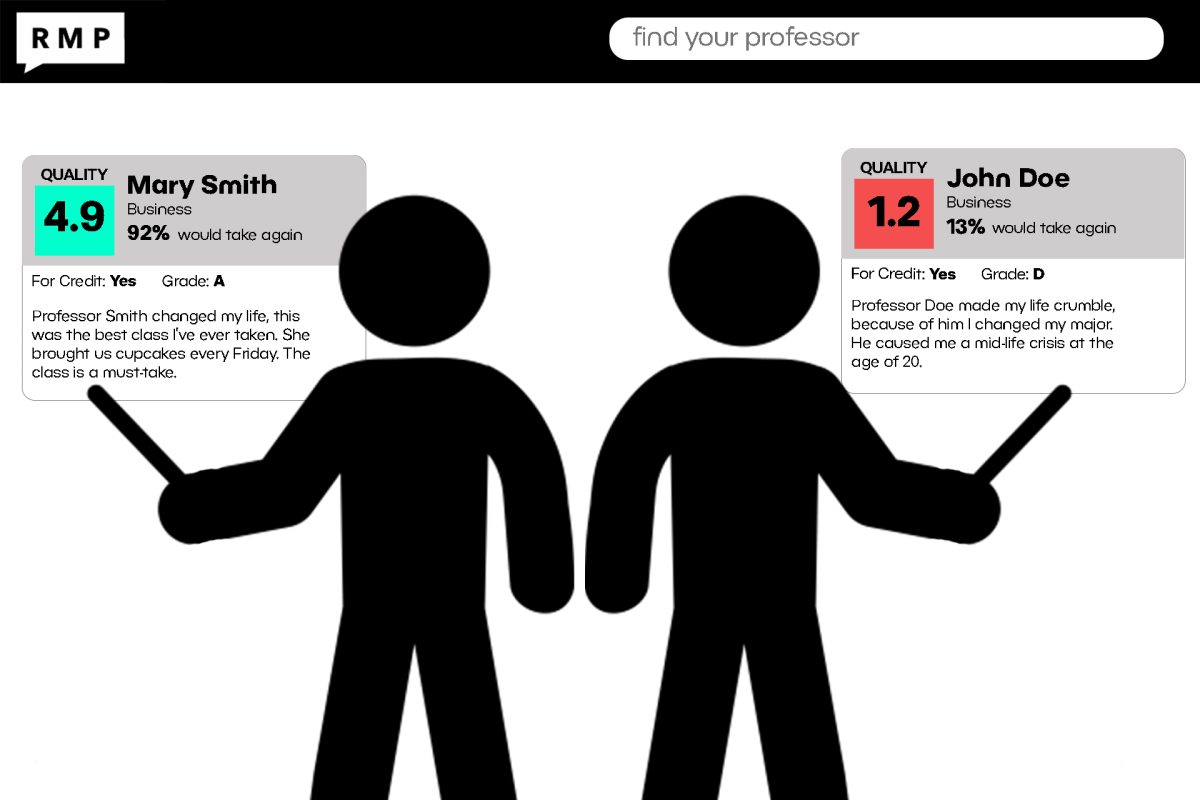

RateMyProfessors allows college students to publicly rate and review their instructors on a five-point scale for overall quality and course difficulty. The platform hosts over 19 million ratings of professors nationwide, but the site’s anonymity and informal structure have sparked an ongoing debate over its fairness and accuracy.

For some students, it’s the first stop during the registration process: a way to gauge which classes are inspiring, which are tedious, and which are just waiting to wreck a GPA. The site’s candid tone and accessibility make the stop feel worthwhile, but it can easily veer into exaggeration. After all, the university conducts its own end-of-course evaluations. These forms, hidden from student view and reserved for administrative eyes, surely weed out the intense instructors – right?

For perspective, the discussion began with Jason Furrer, an associate teaching professor in the School of Medicine whose RateMyProfessors average is 4.9 out of 5 stars. Reviewers tagged his profile with comments like “hilarious,” “caring” and “accessible outside class.” Yet Furrer gently reminds us that RateMyProfessors doesn’t count towards his professional record, and it’s only one piece of a uniquely dynamic picture.

The platform offers students a rare outlet to anonymously share candid feedback, something they might be reluctant to do face-to-face, especially in academic settings where students are surrounded by authoritative figures. Because it’s public, the feedback imprints on future students, influencing decisions in a way university surveys typically don’t.

Furrer acknowledges how RateMyProfessors functions as a sounding board, and he sometimes checks his profile after reading his official evaluations to see if the “public” impression matches internal feedback. However, he cautions that it’s “a discussion board … you can put up whatever you want.” In other words, the platform is useful, but a little messy and unregulated.

He highlighted that students tend to check online sources before choosing a class. RateMyProfessors often fills the void left by the evaluations that only instructors and administrators see. Students, he added, sometimes come into his class already expecting a balance of material difficulty and support from him, as suggested on his profile.

One major objection to RateMyProfessors is self-selection bias: people with strong opinions – especially those who had negative experiences – are more likely to post. Several academic critiques support this, noting that anonymous self-reporting platforms are vulnerable to exaggeration and selective targeting. Another study showed that RateMyProfessors ratings tend to skew more negative than end-of-course surveys.

What’s especially telling is that RateMyProfessors ratings include both quality and difficulty scores, which often move in opposite directions because high difficulty can drag down quality scores. Critics have argued that many RateMyProfessors reviews are really ratings of how fun a class feels rather than how well it teaches.

This isn’t the only bias suggested by the platform’s critics. Gender and discipline bias also seem to emerge in reviews, with female professors sometimes receiving lower ratings, potentially due to student expectations or implicit bias. For instance, an analysis of nearly 9 million RateMyProfessors reviews found that women instructors tend to receive significantly lower overall scores than men across many fields.

Beyond bias, RateMyProfessors does not have a verification system to ensure raters have taken the class, and people who are not students could theoretically post reviews. Its moderation team, although committed to reviewing all contributions, ultimately cannot verify users’ identities or the honesty of their reviews. That openness and accessibility are part of what makes the site powerful, but public access is a double-edged sword: it also hurts the platform’s reliability.

For Furrer, the new evaluation standard at the University of Missouri’s Assessment Resource Center is more useful than the previous generic forms because it now focuses on qualities such as clarity, collaboration and inclusivity. Professors can also include custom prompts: “They have a chance to talk about things beyond just the dots that they fill in,” Furrer said.

Furrer also uses a classroom incentive to encourage survey responses: if 75% of his class completes the anonymous evaluation, he awards bonus points. That buy-in means his feedback data is more robust than one might think.

Yet students never see these evaluations, and many may not know they exist. This invisibility creates a gap: RateMyProfessors offers visibility but lacks rigor, while surveys offer the opposite.

So why not let students see data from past evaluations, so it can be used as a tool alongside, if not in place of, RateMyProfessors?

Furrer is cautiously open, saying, “It certainly would be useful for students to see some of the previous feedback, or just the general numbers … on the other side of the coin, there’s stuff that gets put in there sometimes that’s pretty hurtful.”

He has a point – making those evaluations public could unfairly damage reputations.

Furrer suggests a potential middle ground: short summary metrics, rather than full comment sections, or flags for particularly low scores – not full transparency, but meaningful communication. He also proposes a mechanism for flagged reviews, such as a notification and response system for professors when a review’s accuracy is in question.

Given all that, what’s Furrer’s advice to students navigating professor ratings?

Use multiple sources. Don’t let a single review tip your decision. Ask friends, browse syllabi and check departmental websites.

Watch for recurring themes, not stray complaints or praise. If many students mention “unclear grading” or “supportive office hours” over time, that’s worth noting.

Mind personality clashes. Sometimes a bad review stems from a personality mismatch, not bad teaching.

Don’t avoid challenges in pursuit of comfort. He jokes: “you could drop this class and take the easier guy next semester – except that’d be me.” Sometimes the course that, in his words, “kicks your butt” is the one you’ll appreciate most.

RateMyProfessors is a vivid, if somewhat messy, signal. Official surveys are slow, hidden and structured. Both are beneficial, but don’t worship either one. You can check RateMyProfessors before class registration, but take it with a grain of salt and a readiness to find a class you’ll love – not just one that sounds easy.